
Pass4itsure shares valid Microsoft DP-201 questions to help you pass the Microsoft DP-201 exam. Get the newest Pass4itsure Microsoft DP-201 exam dumps in VCE and PDF formats here: https://www.pass4itsure.com/dp-201.html (186 Q&As).

[Free PDF] Microsoft DP-201 pdf Q&As https://drive.google.com/file/d/1EnK7K3qn-FIh3PhKIsPtOuL3Vc_A5YXz/view?usp=sharing

Suitable for the complete Microsoft DP-201 learning pathway

The content is rich and varied, so studying does not become boring. You can prepare for the Microsoft DP-201 exam in several ways:

- Download the free PDF

- Answer practice questions modeled on the actual Microsoft DP-201 test

Microsoft DP-201 Designing an Azure Data Solution


Pass4itsure offers the latest Microsoft DP-201 practice questions (1 to 13) free of charge

QUESTION 1

You need to recommend a security solution to grant anonymous users permission to access the blobs in a specific container only. What should you include in the recommendation?

A. access keys for the storage account

B. shared access signatures (SAS)

C. Role assignments

D. the public access level for the blobs service

Correct Answer: D

You can enable anonymous, public read access to a container and its blobs in Azure Blob storage. By doing so, you can grant read-only access to these resources without sharing your account key, and without requiring a shared access signature (SAS).

Public read access is best for scenarios where you want certain blobs to always be available for anonymous read access.
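To make the difference between the access levels concrete, here is a small Python model of the three public access levels (private, blob, and container) described in the Azure documentation. It is a hypothetical sketch, not an Azure SDK call: the dictionary and function names are invented for illustration, and the model only encodes two rules, that anonymous access is always read-only and that listing a container requires container-level access.

```python
# Hypothetical model of the three Blob storage public access levels.
# Anonymous access is always read-only, whatever the level.
ANONYMOUS_OPERATIONS = {
    "private": set(),                          # no anonymous access
    "blob": {"read_blob"},                     # read blobs by URL, but no listing
    "container": {"read_blob", "list_blobs"},  # read blobs and list the container
}


def anonymous_request_allowed(access_level: str, operation: str) -> bool:
    """Return True if an unauthenticated request may perform `operation`
    against a container configured with `access_level`."""
    if access_level not in ANONYMOUS_OPERATIONS:
        raise ValueError(f"unknown public access level: {access_level!r}")
    return operation in ANONYMOUS_OPERATIONS[access_level]


# The level is set per container, so other containers can stay private.
containers = {"public-images": "blob", "internal-reports": "private"}
for name, level in containers.items():
    print(name, anonymous_request_allowed(level, "read_blob"))
# public-images True
# internal-reports False
```

Because the setting lives on the container, only the chosen container is exposed, which is what "a specific container only" asks for.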

References: https://docs.microsoft.com/en-us/azure/storage/blobs/storage-manage-access-to-resources

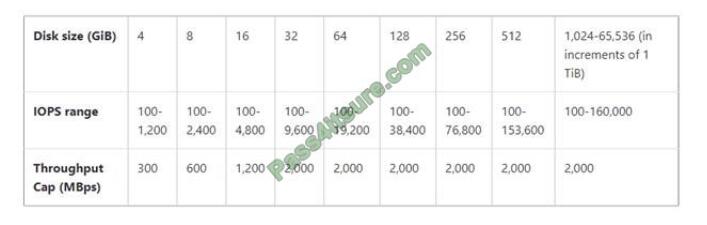

QUESTION 2

You need to design a solution to meet the SQL Server storage requirements for CONT_SQL3. Which type of disk should you recommend?

A. Standard SSD Managed Disk

B. Premium SSD Managed Disk

C. Ultra SSD Managed Disk

Correct Answer: C

CONT_SQL3 requires an initial scale of 35,000 IOPS. Of the listed options, only Ultra SSD managed disks offer that level of performance on a single disk.
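The reasoning behind this answer is a simple threshold check. The Python sketch below picks the lowest-cost tier whose single-disk IOPS ceiling covers the requirement. The ceilings are assumptions based on the largest disk size per tier as published around the time of this exam (Standard SSD 6,000, Premium SSD 20,000, Ultra SSD 160,000 IOPS); they have changed since, so check the current disk-types page before relying on them. Striping several Premium disks together is deliberately ignored.

```python
# Assumed per-disk IOPS ceilings (largest size in each tier), ordered from
# cheapest to most expensive tier. Verify against current Azure documentation.
MAX_IOPS_PER_DISK = [
    ("Standard SSD", 6_000),
    ("Premium SSD", 20_000),
    ("Ultra SSD", 160_000),
]


def cheapest_disk_type(required_iops: int) -> str:
    """Return the first tier whose single-disk IOPS ceiling meets required_iops."""
    for disk_type, max_iops in MAX_IOPS_PER_DISK:
        if required_iops <= max_iops:
            return disk_type
    raise ValueError(f"no single managed disk supports {required_iops} IOPS")


print(cheapest_disk_type(35_000))  # Ultra SSD
```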

References: https://docs.microsoft.com/en-us/azure/virtual-machines/windows/disks-types

QUESTION 3

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You plan to store delimited text files in an Azure Data Lake Storage account that will be organized into department folders.

You need to configure data access so that users see only the files in their respective department folder.

Solution: From the storage account, you disable a hierarchical namespace, and you use RBAC.

Does this meet the goal?

A. Yes

B. No

Correct Answer: B

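Use access control lists (ACLs) instead of RBAC.

Note: Azure Data Lake Storage implements an access control model that derives from HDFS, which in turn derives from the POSIX access control model.

Folder-level ACLs require the hierarchical namespace to be enabled. With the hierarchical namespace disabled, Azure RBAC roles for blob data can only be scoped to the storage account or to a container, not to an individual department folder.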
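The POSIX-style model means that a user needs execute permission on every parent folder and read permission on the file itself. The simplified Python sketch below checks exactly that. The ACL table, user names, and folder names are hypothetical, and the sketch ignores the owning user, owning group, mask, and "other" entries that real ADLS Gen2 ACLs also evaluate.

```python
from typing import Dict

# Hypothetical ACL table: path -> {principal: "rwx"-style permission string}.
Acl = Dict[str, Dict[str, str]]


def _perms(acls: Acl, path: str, principal: str) -> str:
    # Missing entries mean no permissions at all.
    return acls.get(path, {}).get(principal, "---")


def can_read_file(acls: Acl, principal: str, file_path: str) -> bool:
    """Simplified ADLS Gen2-style check: execute (x) on the root and on every
    parent directory, plus read (r) on the file itself."""
    parts = file_path.strip("/").split("/")
    for depth in range(len(parts)):
        directory = "/" + "/".join(parts[:depth])  # "/", then "/sales", ...
        if _perms(acls, directory, principal)[2] != "x":
            return False
    return _perms(acls, "/" + "/".join(parts), principal)[0] == "r"


acls: Acl = {
    "/": {"alice": "--x", "bob": "--x"},
    "/sales": {"alice": "r-x"},
    "/sales/q1.csv": {"alice": "r--"},
    "/hr": {"bob": "r-x"},
    "/hr/salaries.csv": {"bob": "r--"},
}
print(can_read_file(acls, "alice", "/sales/q1.csv"))     # True
print(can_read_file(acls, "alice", "/hr/salaries.csv"))  # False
```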

References:

https://docs.microsoft.com/en-us/azure/storage/blobs/data-lake-storage-known-issues

https://docs.microsoft.com/en-us/azure/data-lake-store/data-lake-store-access-control

QUESTION 4

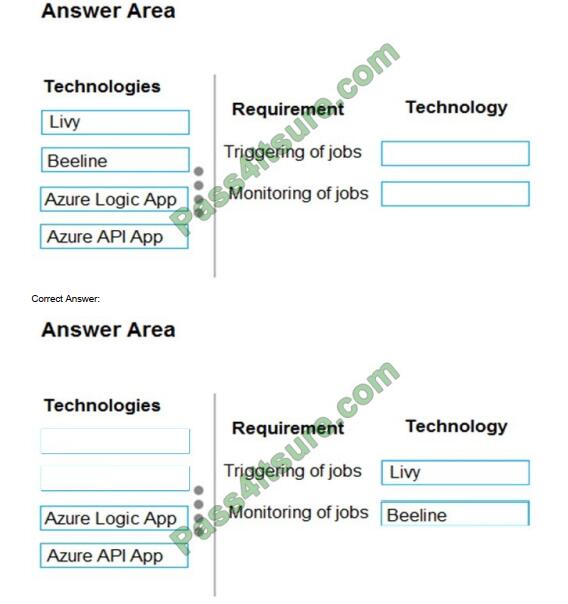

DRAG DROP

You are designing a Spark job that performs batch processing of daily web log traffic.

When you deploy the job in the production environment, it must meet the following requirements:

1. Run once a day.

2. Display status information on the company intranet as the job runs.

You need to recommend technologies for triggering and monitoring jobs.

Which technologies should you recommend? To answer, drag the appropriate technologies to the correct locations. Each technology may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:

Box 1: Livy

You can use Livy to run interactive Spark shells or submit batch jobs to be run on Spark.

Box 2: Beeline

Apache Beeline can be used to run Apache Hive queries on HDInsight. You can use Beeline with Apache Spark.

Note: Beeline is a Hive client that is included on the head nodes of your HDInsight cluster. Beeline uses JDBC to connect to HiveServer2, a service hosted on your HDInsight cluster. You can also use Beeline to access Hive on HDInsight remotely over the internet.
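Livy exposes Spark batch submission as a REST call: a POST to /batches with a JSON body naming the jar and its main class. The Python sketch below only builds that request with the standard library and does not send it. The cluster name, credentials, jar path, and class name are hypothetical, and the X-Requested-By header mirrors HDInsight's Livy curl examples. Scheduling the daily run (for example, with cron or a workflow tool) is outside the sketch.

```python
import base64
import json
import urllib.request


def build_livy_batch_request(cluster: str, user: str, password: str,
                             jar_path: str, class_name: str, args: list) -> urllib.request.Request:
    """Build, but do not send, a Livy POST /batches request for an HDInsight cluster."""
    url = f"https://{cluster}.azurehdinsight.net/livy/batches"
    body = json.dumps({"file": jar_path, "className": class_name, "args": args}).encode("utf-8")
    credentials = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "X-Requested-By": user,  # header used in HDInsight's Livy curl examples
            "Authorization": f"Basic {credentials}",
        },
    )


# Hypothetical names. A daily scheduler would call urllib.request.urlopen(request);
# Livy answers with a batch id whose state can be polled with GET /livy/batches/<id>.
request = build_livy_batch_request(
    "contoso-spark", "admin", "example-password",
    "wasbs:///example/jars/weblog-batch.jar", "com.contoso.WebLogBatch", ["2020-06-01"],
)
print(request.get_method(), request.full_url)
# POST https://contoso-spark.azurehdinsight.net/livy/batches
```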

References:

https://docs.microsoft.com/en-us/azure/hdinsight/spark/apache-spark-livy-rest-interface

https://docs.microsoft.com/en-us/azure/hdinsight/hadoop/apache-hadoop-use-hive-beeline

QUESTION 5

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure SQL database that contains columns with sensitive Personally Identifiable Information (PII) data.

You need to design a solution that tracks and stores all the queries executed against the PII data. You must be able to review the data in Azure Monitor, and the data must be available for at least 45 days.

Solution: You add classifications to the columns that contain sensitive data. You turn on Auditing and set the audit log destination to use Azure Blob storage.

Does this meet the goal?

A. Yes

B. No

Correct Answer: A

Auditing has been enhanced to log sensitivity classifications or labels of the actual data that were returned by the query.

This would enable you to gain insights on who is accessing sensitive data.

References: https://azure.microsoft.com/en-us/blog/announcing-public-preview-of-data-discovery-classification-for-microsoft-azure-sql-data-warehouse/

QUESTION 6

You are designing an Azure SQL Data Warehouse. You plan to load millions of rows of data into the data warehouse each day.

You must ensure that staging tables are optimized for data loading.

You need to design the staging tables.

What type of tables should you recommend?

A. Round-robin distributed table

B. Hash-distributed table

C. Replicated table

D. External table

Correct Answer: A

To achieve the fastest loading speed for moving data into a data warehouse table, load data into a staging table. Define the staging table as a heap and use round-robin for the distribution option.

Incorrect:

Not B: Consider that loading is usually a two-step process in which you first load to a staging table and then insert the data into a production data warehouse table. If the production table uses a hash distribution, the total time to load and insert might be faster if you define the staging table with the hash distribution. Loading to the staging table takes longer, but the second step of inserting the rows to the production table does not incur data movement across the distributions.
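Why round-robin suits a staging table can be shown with a quick simulation: round-robin places rows in turn, so every distribution gets an almost identical share, while a hash column with few distinct values piles rows into one distribution. The Python sketch below assumes the 60 distributions of a dedicated SQL pool; zlib.crc32 is only a stand-in for the engine's own hash function, and the "USD" column is a hypothetical low-cardinality key.

```python
import zlib
from collections import Counter

DISTRIBUTIONS = 60  # a dedicated SQL pool spreads each table over 60 distributions


def round_robin(row_count: int) -> Counter:
    """Assign rows to distributions in turn, without looking at their contents."""
    return Counter(row % DISTRIBUTIONS for row in range(row_count))


def hash_distribute(keys) -> Counter:
    """Assign each row by hashing its distribution column value.
    zlib.crc32 stands in for the engine's own (different) hash function."""
    return Counter(zlib.crc32(str(key).encode("utf-8")) % DISTRIBUTIONS for key in keys)


rr = round_robin(100_000)
print(len(rr), max(rr.values()) - min(rr.values()))  # 60 1

# A low-cardinality hash column piles every row into a single distribution.
skewed = hash_distribute(["USD"] * 100_000)
print(len(skewed))  # 1
```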

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/guidance-for-loading-data

QUESTION 7

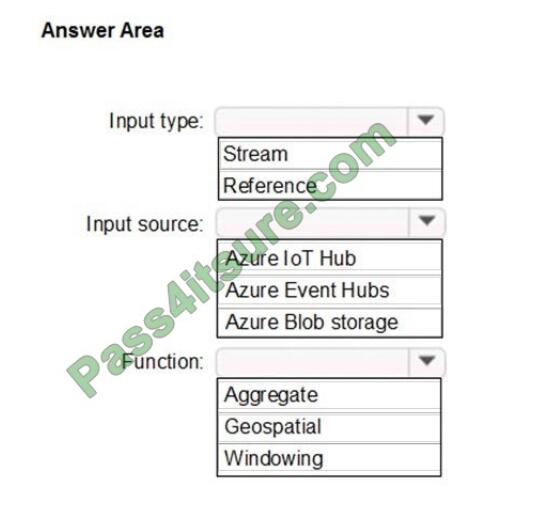

HOTSPOT

You plan to create a real-time monitoring app that alerts users when a device travels more than 200 meters away from a designated location.

You need to design an Azure Stream Analytics job to process the data for the planned app. The solution must minimize the amount of code developed and the number of technologies used.

What should you include in the Stream Analytics job? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

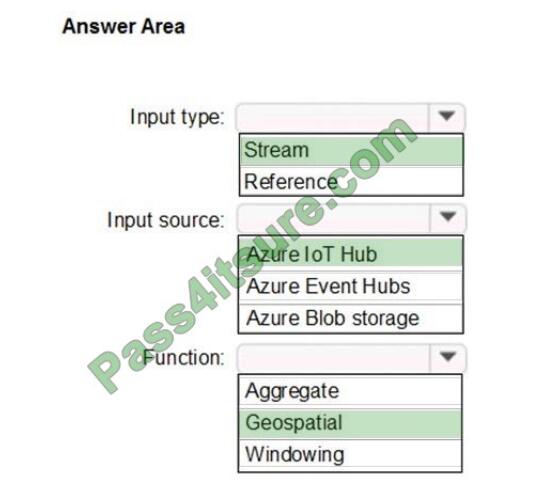

Correct Answer:

Input type: Stream

You can process real-time IoT data streams with Azure Stream Analytics.

Input source: Azure IoT Hub

In a real-world scenario, you could have hundreds of these sensors generating events as a stream. Ideally, a gateway device would run code to push these events to Azure Event Hubs or Azure IoT Hub.

Function: Geospatial

With built-in geospatial functions, you can use Azure Stream Analytics to build applications for scenarios such as fleet management, ride sharing, connected cars, and asset tracking.
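In a Stream Analytics query, the distance check would typically use the built-in geospatial functions, roughly `ST_DISTANCE(CreatePoint(lat, lon), CreatePoint(homeLat, homeLon)) > 200`, where the column names are hypothetical. The Python sketch below mimics the same check with the haversine formula so the arithmetic is visible. It assumes a spherical Earth with a 6,371 km mean radius, which may differ slightly from the model Stream Analytics uses, and the coordinates are made up.

```python
import math

EARTH_RADIUS_M = 6_371_000  # assumed spherical Earth (mean radius)


def distance_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters using the haversine formula."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def should_alert(device: tuple, home: tuple, limit_m: float = 200.0) -> bool:
    """True when the device is more than limit_m meters from its designated location."""
    return distance_m(*device, *home) > limit_m


home = (47.6400, -122.1300)  # hypothetical designated location
print(should_alert((47.6410, -122.1300), home))  # about 111 m away -> False
print(should_alert((47.6420, -122.1300), home))  # about 222 m away -> True
```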

Reference:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-get-started-with-azure-stream-analytics-to-process-data-from-iot-devices

https://docs.microsoft.com/en-us/azure/stream-analytics/geospatial-scenarios

QUESTION 8

HOTSPOT

A company has locations in North America and Europe. The company uses Azure SQL Database to support business apps.

Employees must be able to access the app data in case of a region-wide outage. A multi-region availability solution is needed with the following requirements:

1. Read-access to data in a secondary region must be available only in case of an outage of the primary region.

2. The Azure SQL Database compute and storage layers must be integrated and replicated together.

You need to design the multi-region high availability solution.

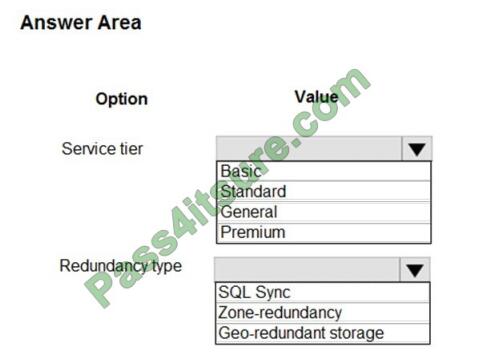

What should you recommend? To answer, select the appropriate values in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

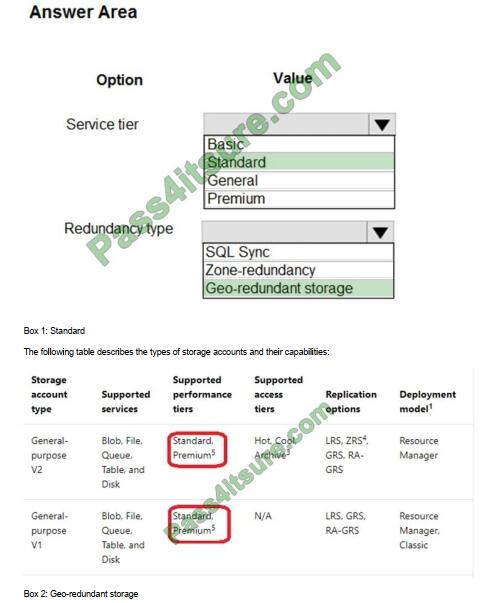

Correct Answer:

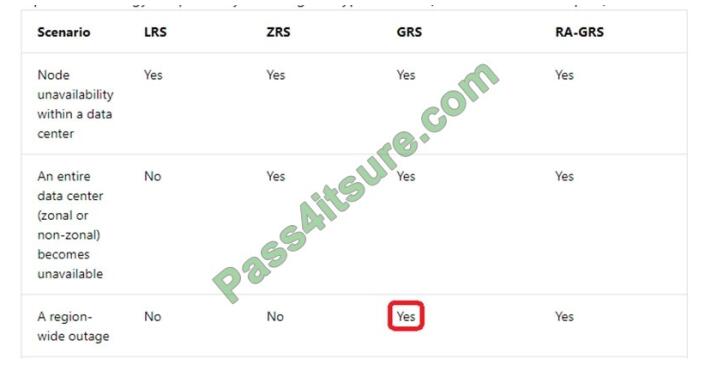

If your storage account has GRS enabled, then your data is durable even in the case of a complete regional outage or a disaster in which the primary region isn't recoverable.

Note: If you opt for GRS, you have two related options to choose from:

- GRS replicates your data to another data center in a secondary region, but that data is available to be read only if Microsoft initiates a failover from the primary to secondary region.

- Read-access geo-redundant storage (RA-GRS) is based on GRS. RA-GRS replicates your data to another data center in a secondary region, and also provides you with the option to read from the secondary region. With RA-GRS, you can read from the secondary region regardless of whether Microsoft initiates a failover from the primary to secondary region.
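The practical difference between the two storage options is when the secondary copy can be read. The tiny Python model below encodes just that rule as the note above describes it; the function name is hypothetical, and the model ignores replication lag and how the account's redundancy changes after a failover.

```python
def can_read_secondary(redundancy: str, failover_done: bool) -> bool:
    """Simplified model: can clients read the data replicated to the secondary region?"""
    if redundancy == "RA-GRS":
        return True           # read-only secondary endpoint is available at any time
    if redundancy == "GRS":
        return failover_done  # readable only after a failover makes it the primary
    raise ValueError(f"unsupported redundancy option: {redundancy!r}")


for option in ("GRS", "RA-GRS"):
    print(option, can_read_secondary(option, failover_done=False))
# GRS False
# RA-GRS True
```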

References:

https://docs.microsoft.com/en-us/azure/storage/common/storage-introduction

https://docs.microsoft.com/en-us/azure/storage/common/storage-redundancy-grs

QUESTION 9

You need to design the data loading pipeline for Planning Assistance.

What should you recommend? To answer, drag the appropriate technologies to the correct locations. Each technology may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Hot Area:

Box 1: SqlSink Table

Sensor data must be stored in a Cosmos DB named treydata in a collection named SensorData.

Box 2: Cosmos Bulk Loading

Use Copy Activity in Azure Data Factory to copy data from and to Azure Cosmos DB (SQL API).

Scenario: Data from the Sensor Data collection will automatically be loaded into the Planning Assistance database once a week by using Azure Data Factory. You must be able to manually trigger the data load process.

Data used for Planning Assistance must be stored in a sharded Azure SQL Database.
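For orientation, here is a Python sketch that builds the two Data Factory JSON pieces the scenario implies: a Copy activity from Cosmos DB (SQL API) into Azure SQL Database, and a schedule trigger that runs the pipeline weekly. Pipeline, activity, dataset, and trigger names are hypothetical, and linked services and dataset definitions are omitted. The manual-load requirement needs no extra definition, since a pipeline can also be started on demand (for example, with Trigger now in the authoring UI).

```python
import json


def copy_activity(name: str, source_dataset: str, sink_dataset: str) -> dict:
    """Copy activity that reads from Cosmos DB (SQL API) and writes to Azure SQL Database."""
    return {
        "name": name,
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {"type": "CosmosDbSqlApiSource"},
            "sink": {"type": "SqlSink"},  # newer pipelines typically use "AzureSqlSink"
        },
    }


def weekly_schedule_trigger(name: str, pipeline_name: str, start_time: str) -> dict:
    """Schedule trigger that runs the referenced pipeline once a week."""
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {"frequency": "Week", "interval": 1,
                               "startTime": start_time, "timeZone": "UTC"},
            },
            "pipelines": [{"pipelineReference": {"referenceName": pipeline_name,
                                                 "type": "PipelineReference"}}],
        },
    }


pipeline = {
    "name": "LoadPlanningAssistance",
    "properties": {"activities": [
        copy_activity("CopySensorData", "SensorDataCosmos", "PlanningAssistanceSql")]},
}
trigger = weekly_schedule_trigger("WeeklyLoad", "LoadPlanningAssistance", "2020-06-01T00:00:00Z")
print(json.dumps(trigger["properties"]["typeProperties"]["recurrence"]))
```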

References:

https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-cosmos-db

QUESTION 10

You have an Azure Storage account.

You plan to copy one million image files to the storage account.

You plan to share the files with an external partner organization. The partner organization will analyze the files during the next year.

You need to recommend an external access solution for the storage account. The solution must meet the following requirements:

Ensure that only the partner organization can access the storage account.

Ensure that access of the partner organization is removed automatically after 365 days.

What should you include in the recommendation?

A. shared keys

B. Azure Blob storage lifecycle management policies

C. Azure policies

D. shared access signature (SAS)

Correct Answer: D

A shared access signature (SAS) is a URI that grants restricted access rights to Azure Storage resources. You can provide a shared access signature to clients who should not be trusted with your storage account key but to whom you wish to delegate access to certain storage account resources. By distributing a shared access signature URI to these clients, you can grant them access to a resource for a specified period of time, with a specified set of permissions.
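The key property of a SAS is that the expiry is part of the signed content, so the holder cannot extend it. The Python sketch below is a toy token that imitates that idea with HMAC-SHA256. It borrows the sp, se, and sig parameter names from real SAS tokens, but the string-to-sign, key, and resource path are hypothetical and do not match Azure's actual format. In practice you would generate the token with an Azure SDK helper such as generate_container_sas from azure-storage-blob.

```python
import base64
import hashlib
import hmac
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlencode

TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def _sign(key: bytes, permissions: str, expiry: str, resource: str) -> str:
    # Toy string-to-sign; Azure's real SAS string-to-sign has many more fields.
    string_to_sign = "\n".join([permissions, expiry, resource])
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def issue_token(key: bytes, resource: str, permissions: str, start: datetime, days: int) -> str:
    """Issue a query-string token that expires `days` after `start`."""
    expiry = (start + timedelta(days=days)).strftime(TIME_FORMAT)
    return urlencode({"sp": permissions, "se": expiry, "sig": _sign(key, permissions, expiry, resource)})


def is_valid(key: bytes, resource: str, token: str, now: datetime) -> bool:
    """Reject tampered tokens, then reject tokens whose expiry has passed."""
    fields = {name: values[0] for name, values in parse_qs(token).items()}
    expected = _sign(key, fields["sp"], fields["se"], resource)
    if not hmac.compare_digest(expected, fields["sig"]):
        return False
    expiry = datetime.strptime(fields["se"], TIME_FORMAT).replace(tzinfo=timezone.utc)
    return now < expiry


key = b"hypothetical-account-key"
start = datetime(2020, 1, 1, tzinfo=timezone.utc)
token = issue_token(key, "/partner-images", "rl", start, days=365)
print(is_valid(key, "/partner-images", token, start + timedelta(days=364)))  # True
print(is_valid(key, "/partner-images", token, start + timedelta(days=366)))  # False
```

Because access simply stops being valid at the signed expiry, no cleanup job is needed to remove the partner's access after 365 days.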

Reference: https://docs.microsoft.com/en-us/rest/api/storageservices/delegate-access-with-shared-access-signature

QUESTION 11

You are designing an Azure Databricks cluster that runs user-defined local processes. You need to recommend a cluster configuration that meets the following requirements:

1. Minimize query latency.

2. Reduce overall costs.

3. Maximize the number of users that can run queries on the cluster at the same time.

Which cluster type should you recommend?

A. Standard with Autoscaling

B. High Concurrency with Auto Termination

C. High Concurrency with Autoscaling

D. Standard with Auto Termination

Correct Answer: C

High Concurrency clusters allow multiple users to run queries on the cluster at the same time, while minimizing query latency. Autoscaling clusters can reduce overall costs compared to a statically sized cluster.
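As a sketch, the recommended configuration can be expressed as a Databricks Clusters API-style specification. The helper, cluster name, runtime version, and node type below are hypothetical. The spark_conf and custom_tags values are the ones Databricks documented for creating legacy High Concurrency clusters through the API; newer workspaces use cluster access modes instead.

```python
import json


def high_concurrency_autoscaling_cluster(name: str, spark_version: str, node_type: str,
                                         min_workers: int, max_workers: int) -> dict:
    """Build a Clusters API-style spec for a legacy High Concurrency cluster with autoscaling."""
    if not 0 < min_workers <= max_workers:
        raise ValueError("expected 0 < min_workers <= max_workers")
    return {
        "cluster_name": name,
        "spark_version": spark_version,
        "node_type_id": node_type,
        # Autoscaling: the cluster grows toward max_workers under load and
        # shrinks back toward min_workers when idle, which cuts cost.
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        # These settings are what marked a cluster as High Concurrency.
        "spark_conf": {
            "spark.databricks.cluster.profile": "serverless",
            "spark.databricks.repl.allowedLanguages": "sql,python,r",
        },
        "custom_tags": {"ResourceClass": "Serverless"},
    }


spec = high_concurrency_autoscaling_cluster(
    "shared-analytics", "7.3.x-scala2.12", "Standard_DS3_v2", min_workers=2, max_workers=8)
print(json.dumps(spec["autoscale"]))  # {"min_workers": 2, "max_workers": 8}
```

The autoscale block replaces a fixed num_workers value, which is what distinguishes option C from a statically sized cluster.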

Incorrect Answers:

A, D: Standard clusters are recommended for a single user.

References:

https://docs.azuredatabricks.net/user-guide/clusters/create.html

https://docs.azuredatabricks.net/user-guide/clusters/high-concurrency.html#high-concurrency

https://docs.azuredatabricks.net/user-guide/clusters/terminate.html

https://docs.azuredatabricks.net/user-guide/clusters/sizing.html#enable-and-configure-autoscaling

QUESTION 12

Your company is an online retailer that can have more than 100 million orders during a 24-hour period, 95 percent of which are placed between 16:30 and 17:00. All the orders are in US dollars. The current product line contains the following three item categories:

1. Games with 15,123 items

2. Books with 35,312 items

3. Pens with 6,234 items

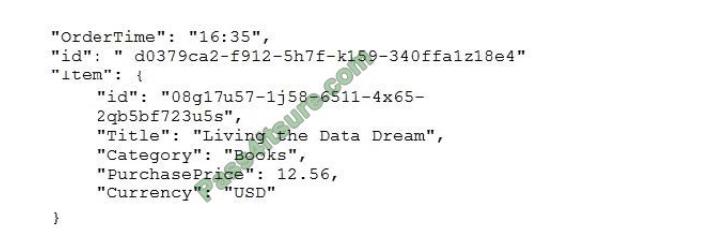

You are designing an Azure Cosmos DB data solution for a collection named Orders Collection. The following document is a typical order in Orders Collection.

A. Item/Category

B. OrderTime

C. Item/Currency

D. Item/id

Correct Answer: A

Choose a partition key that has a wide range of values and access patterns that are evenly spread across logical partitions. This helps spread the data and the activity in your container across the set of logical partitions, so that resources for data storage and throughput can be distributed across the logical partitions.

Choose a partition key that spreads the workload evenly across all partitions and evenly over time. Your choice of partition key should balance the need for efficient partition queries and transactions against the goal of distributing items across multiple partitions to achieve scalability.

Candidates for partition keys might include properties that appear frequently as a filter in your queries. Queries can be efficiently routed by including the partition key in the filter predicate.
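One way to sanity-check a candidate partition key is to measure how many distinct values it produces and how large a share of the documents the busiest value receives. The Python sketch below does that for a few hypothetical order documents; the document shape is an assumption, since the typical order in the question is only shown as an image. With every order in US dollars, Item/Currency collapses into a single hot partition, which is why option C fails.

```python
from collections import Counter


def _lookup(document: dict, key_path: str):
    """Follow a Cosmos DB-style path such as "/Item/Category" into a document."""
    value = document
    for part in key_path.strip("/").split("/"):
        value = value[part]
    return value


def partition_spread(documents: list, key_path: str) -> tuple:
    """Return (distinct key values, largest share of documents under one value)."""
    counts = Counter(_lookup(doc, key_path) for doc in documents)
    return len(counts), max(counts.values()) / len(documents)


# Hypothetical orders; the real document shape is only shown as an image.
orders = [
    {"id": "1", "OrderTime": "16:35", "Item": {"id": "g-1", "Category": "Games", "Currency": "USD"}},
    {"id": "2", "OrderTime": "16:41", "Item": {"id": "b-7", "Category": "Books", "Currency": "USD"}},
    {"id": "3", "OrderTime": "16:52", "Item": {"id": "b-9", "Category": "Books", "Currency": "USD"}},
    {"id": "4", "OrderTime": "16:58", "Item": {"id": "p-2", "Category": "Pens", "Currency": "USD"}},
]
print(partition_spread(orders, "/Item/Currency"))  # (1, 1.0)
print(partition_spread(orders, "/Item/Category"))  # (3, 0.5)
```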

References: https://docs.microsoft.com/en-us/azure/cosmos-db/partitioning-overview#choose-partition-key

QUESTION 13

A company has an application that uses Azure SQL Database as the data store.

The application experiences a large increase in activity during the last month of each year.

You need to manually scale the Azure SQL Database instance to account for the increase in data write operations.

Which scaling method should you recommend?

A. Scale up by using elastic pools to distribute resources.

B. Scale out by sharding the data across databases.

C. Scale up by increasing the database throughput units.

Correct Answer: C

In the DTU-based purchasing model, the cost of running an Azure SQL Database instance depends on the number of Database Transaction Units (DTUs) allocated to the database. When deciding how many units to allocate, a major contributing factor is the processing power needed to handle the expected volume of requests.

Running the statement to upgrade/downgrade your database takes a matter of seconds.
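The manual scale-up can be done with a single T-SQL statement. The Python sketch below picks the smallest Standard-tier service objective that covers an estimated DTU need and builds the matching ALTER DATABASE statement. The database name and the 90-DTU estimate are hypothetical, and the DTU table reflects the Standard tier of the DTU purchasing model. Running the same statement with a smaller objective after the peak scales the database back down.

```python
# Standard-tier service objectives and their DTU allocations (DTU purchasing model).
STANDARD_DTUS = {"S0": 10, "S1": 20, "S2": 50, "S3": 100, "S4": 200,
                 "S6": 400, "S7": 800, "S9": 1600, "S12": 3000}


def smallest_standard_objective(required_dtus: int) -> str:
    """Return the smallest Standard objective that provides at least required_dtus."""
    for objective, dtus in sorted(STANDARD_DTUS.items(), key=lambda item: item[1]):
        if dtus >= required_dtus:
            return objective
    raise ValueError(f"{required_dtus} DTUs exceeds the Standard tier")


def scale_statement(database: str, objective: str) -> str:
    """Build the T-SQL that moves a database to another Standard service objective."""
    if objective not in STANDARD_DTUS:
        raise ValueError(f"unknown Standard objective: {objective!r}")
    quoted = database.replace("]", "]]")  # escape for a [bracketed] identifier
    return (f"ALTER DATABASE [{quoted}] MODIFY "
            f"(EDITION = 'Standard', SERVICE_OBJECTIVE = '{objective}');")


# Hypothetical December peak that needs roughly 90 DTUs.
peak = smallest_standard_objective(90)
print(scale_statement("RetailDb", peak))
# ALTER DATABASE [RetailDb] MODIFY (EDITION = 'Standard', SERVICE_OBJECTIVE = 'S3');
```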

Incorrect Answers:

A: Elastic pools are used if there are two or more databases.

References: https://www.skylinetechnologies.com/Blog/Skyline-Blog/August_2017/dynamically-scale-azure-sql-database

Summary:

[Q1-Q13] Free Microsoft DP-201 pdf download https://drive.google.com/file/d/1EnK7K3qn-FIh3PhKIsPtOuL3Vc_A5YXz/view?usp=sharing

Share all the resources: the latest Microsoft DP-201 practice questions and the latest Microsoft DP-201 PDF dumps. The latest updated Microsoft DP-201 dumps are available at https://www.pass4itsure.com/dp-201.html. Study hard and practice a lot; this will help you prepare for the Microsoft DP-201 exam. Good luck!


Written by Ralph K. Merritt
