Where can you find a DP-200 PDF or exam dump to download? Here you can get the latest Microsoft Certifications DP-200 exam dumps and the DP-200 PDF. We’ve compiled the latest Microsoft DP-200 exam questions and answers to help you save time. Microsoft DP-200 exam “Implementing an Azure Data Solution”: https://www.pass4itsure.com/dp-200.html (Q&As: 86). The dump covers the full exam and is intended to help you pass on your first attempt.

Microsoft Certifications DP-200 Exam pdf

[PDF] Free Microsoft DP-200 pdf dumps download from Google Drive: https://drive.google.com/open?id=1lJNE54_9AAyU9kzPI_8NR-PPFqNYM7ys

Related Microsoft Certifications Exam pdf

[PDF] Free Microsoft DP-201 pdf dumps download from Google Drive: https://drive.google.com/open?id=1voG3cYhKFklJuG3ZSghQ98UaUGLlj3MN

[PDF] Free Microsoft MD-100 pdf dumps download from Google Drive: https://drive.google.com/open?id=1s1Iy9Fx7esWTBKip_3ZweRQP2xWQIPoy

Microsoft exam certification information

Exam DP-200: Implementing an Azure Data Solution – Microsoft: https://www.microsoft.com/en-us/learning/exam-dp-200.aspx

Candidates for this exam are Microsoft Azure data engineers who collaborate with business stakeholders to identify and meet the data requirements to implement data solutions that use Azure data services.

Azure data engineers are responsible for data-related tasks that include provisioning data storage services, ingesting streaming and batch data, transforming data, implementing security requirements, implementing data retention policies, identifying performance bottlenecks, and accessing external data sources.

Skills measured

- Implement data storage solutions (40-45%)

- Manage and develop data processing (25-30%)

- Monitor and optimize data solutions (30-35%)

Microsoft Certified: Azure Data Engineer Associate: https://www.microsoft.com/en-us/learning/azure-data-engineer.aspx

Azure Data Engineers design and implement the management, monitoring, security, and privacy of data using the full stack of Azure data services to satisfy business needs. Required exams: DP-200 and DP-201

Microsoft Certifications DP-200 Online Exam Practice Questions

QUESTION 1

You develop data engineering solutions for a company.

A project requires the deployment of data to Azure Data Lake Storage.

You need to implement role-based access control (RBAC) so that project members can manage the Azure Data Lake Storage resources.

Which three actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Assign Azure AD security groups to Azure Data Lake Storage.

B. Configure end-user authentication for the Azure Data Lake Storage account.

C. Configure service-to-service authentication for the Azure Data Lake Storage account.

D. Create security groups in Azure Active Directory (Azure AD) and add project members.

E. Configure access control lists (ACL) for the Azure Data Lake Storage account.

Correct Answer: ADE

QUESTION 2

You develop data engineering solutions for a company.

You need to ingest and visualize real-time Twitter data by using Microsoft Azure.

Which three technologies should you use? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Event Grid topic

B. Azure Stream Analytics Job that queries Twitter data from an Event Hub

C. Azure Stream Analytics Job that queries Twitter data from an Event Grid

D. Logic App that sends Twitter posts which have target keywords to Azure

E. Event Grid subscription

F. Event Hub instance

Correct Answer: BDF

You can use Azure Logic Apps to send tweets to an event hub and then use a Stream Analytics job to read from the event hub and send them to Power BI.

References:

https://community.powerbi.com/t5/Integrations-with-Files-and/Twitter-streaming-analytics-step-by-step/td-p/9594

QUESTION 3

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

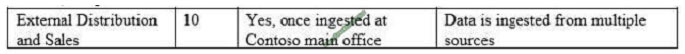

You need to set up monitoring for tiers 6 through 8.

What should you configure?

A. extended events for average storage percentage that emails data engineers

B. an alert rule to monitor CPU percentage in databases that emails data engineers

C. an alert rule to monitor CPU percentage in elastic pools that emails data engineers

D. an alert rule to monitor storage percentage in databases that emails data engineers

E. an alert rule to monitor storage percentage in elastic pools that emails data engineers

Correct Answer: E

Scenario:

Tiers 6 through 8 must have unexpected resource storage usage immediately reported to data engineers.

Tier 3 and Tier 6 through Tier 8 applications must use database density on the same server and Elastic pools in a cost-effective manner.

QUESTION 4

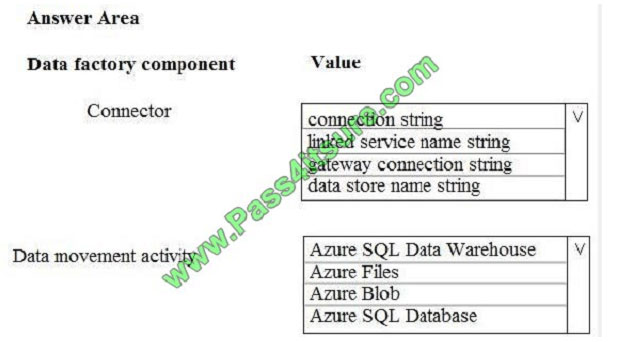

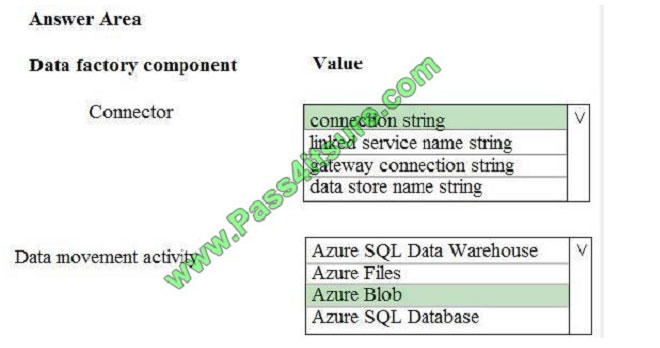

You need to set up the Azure Data Factory JSON definition for Tier 10 data.

What should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

Box 1: Connection String

To use storage account key authentication, you use the connectionString property, which specifies the information needed to connect to Blob Storage.

Mark this field as a SecureString to store it securely in Data Factory. You can also put the account key in Azure Key Vault and pull the accountKey configuration out of the connection string.

Box 2: Azure Blob

Tier 10 reporting data must be stored in Azure Blobs

References: https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-blob-storage
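As a rough illustration of Box 1 and Box 2, the sketch below assembles a linked-service definition of the general shape described in the referenced connector documentation, with the connection string wrapped as a SecureString. The linked-service name `Tier10BlobStorage` and the `<accountname>` / `<accountkey>` placeholders are assumptions made for illustration; they do not come from the exam scenario, and the exact JSON layout may differ between Data Factory versions.

```python
import json


def blob_linked_service(name: str, account: str, key: str) -> dict:
    """Build a Data Factory linked-service definition for Azure Blob Storage.

    Uses storage account key authentication through the connectionString
    property. All argument values are hypothetical placeholders.
    """
    connection_string = (
        f"DefaultEndpointsProtocol=https;AccountName={account};AccountKey={key}"
    )
    return {
        "name": name,
        "properties": {
            # Box 2: the store type is Azure Blob Storage.
            "type": "AzureBlobStorage",
            "typeProperties": {
                # Box 1: the connection string, marked as a SecureString so
                # that Data Factory stores the value securely.
                "connectionString": {
                    "type": "SecureString",
                    "value": connection_string,
                }
            },
        },
    }


definition = blob_linked_service("Tier10BlobStorage", "<accountname>", "<accountkey>")
print(json.dumps(definition, indent=2))
```

Building the definition as a dictionary and serializing it with `json.dumps` keeps the structure easy to inspect before it is deployed by whatever tooling you use.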

QUESTION 5

You need to process and query ingested Tier 9 data.

Which two options should you use? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Azure Notification Hub

B. Transact-SQL statements

C. Azure Cache for Redis

D. Apache Kafka statements

E. Azure Event Grid

F. Azure Stream Analytics

Correct Answer: EF

Explanation:

Event Hubs provides a Kafka endpoint that can be used by your existing Kafka based applications as an alternative to running your own Kafka cluster.

You can stream data into Kafka-enabled Event Hubs and process it with Azure Stream Analytics, in the following steps:

1. Create a Kafka enabled Event Hubs namespace.

2. Create a Kafka client that sends messages to the event hub.

3. Create a Stream Analytics job that copies data from the event hub into an Azure blob storage.

Scenario:

Tier 9 reporting must be moved to Event Hubs, queried, and persisted in the same Azure region as the company’s main office.

References: https://docs.microsoft.com/en-us/azure/event-hubs/event-hubs-kafka-stream-analytics

QUESTION 6

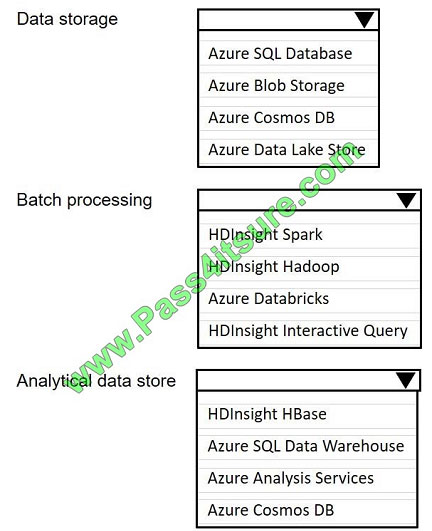

You are developing a solution using a Lambda architecture on Microsoft Azure.

The data at rest layer must meet the following requirements:

Data storage:

- Serve as a repository for high volumes of large files in various formats.

- Implement optimized storage for big data analytics workloads.

- Ensure that data can be organized using a hierarchical structure.

Batch processing:

- Use a managed solution for in-memory computation processing.

- Natively support Scala, Python, and R programming languages.

- Provide the ability to resize and terminate the cluster automatically.

Analytical data store:

- Support parallel processing.

- Use columnar storage.

- Support SQL-based languages.

You need to identify the correct technologies to build the Lambda architecture.

Which technologies should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

QUESTION 7

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution. Determine whether the solution meets the stated goals.

You develop a data ingestion process that will import data to a Microsoft Azure SQL Data Warehouse. The data to be ingested resides in parquet files stored in an Azure Data Lake Gen 2 storage account.

You need to load the data from the Azure Data Lake Gen 2 storage account into the Azure SQL Data Warehouse.

Solution:

1. Create an external data source pointing to the Azure Data Lake Gen 2 storage account.

2. Create an external file format and external table using the external data source.

3. Load the data using the CREATE TABLE AS SELECT statement.

Does the solution meet the goal?

A. Yes

B. No

Correct Answer: A

QUESTION 8

You manage a process that performs analysis of daily web traffic logs on an HDInsight cluster. Each of 250 web servers generates approximately gigabytes (GB) of log data each day. All log data is stored in a single folder in Microsoft Azure Data Lake Storage Gen 2.

You need to improve the performance of the process.

Which two changes should you make? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. Combine the daily log files for all servers into one file

B. Increase the value of the mapreduce.map.memory parameter

C. Move the log files into folders so that each day’s logs are in their own folder

D. Increase the number of worker nodes

E. Increase the value of the hive.tez.container.size parameter

Correct Answer: AC

A: Typically, analytics engines such as HDInsight and Azure Data Lake Analytics have a per-file overhead. If you store your data as many small files, this can negatively affect performance. In general, organize your data into larger sized files for better performance (256MB to 100GB in size). Some engines and applications might have trouble efficiently processing files that are greater than 100GB in size.

C: For Hive workloads, partition pruning of time-series data can help some queries read only a subset of the data, which improves performance.

Pipelines that ingest time-series data often place their files with a very structured naming for files and folders. Below is a very common example we see for data that is structured by date:

\DataSet\YYYY\MM\DD\datafile_YYYY_MM_DD.tsv

Notice that the datetime information appears both as folders and in the filename.

References:

https://docs.microsoft.com/en-us/azure/storage/blobs/data-lake-storage-performance-tuning-guidance
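To make option C concrete, here is a minimal sketch of how a pipeline might derive the date-structured location shown above for a given day's log file. The function name `partitioned_path`, the `tsv` default, and the sample date are assumptions for illustration only; the layout simply mirrors the `DataSet/YYYY/MM/DD/datafile_YYYY_MM_DD.tsv` pattern from the explanation.

```python
from datetime import date
from pathlib import PurePosixPath


def partitioned_path(dataset: str, day: date, extension: str = "tsv") -> PurePosixPath:
    """Return DataSet/YYYY/MM/DD/datafile_YYYY_MM_DD.<extension> for one day."""
    # The date appears both in the folder hierarchy and in the file name.
    filename = f"datafile_{day:%Y_%m_%d}.{extension}"
    return PurePosixPath(dataset, f"{day:%Y}", f"{day:%m}", f"{day:%d}", filename)


print(partitioned_path("DataSet", date(2019, 7, 4)))
# prints: DataSet/2019/07/04/datafile_2019_07_04.tsv
```

With each day's logs under their own folder, a query that filters on a date range only has to list and read the matching folders instead of scanning a single folder that holds everything.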

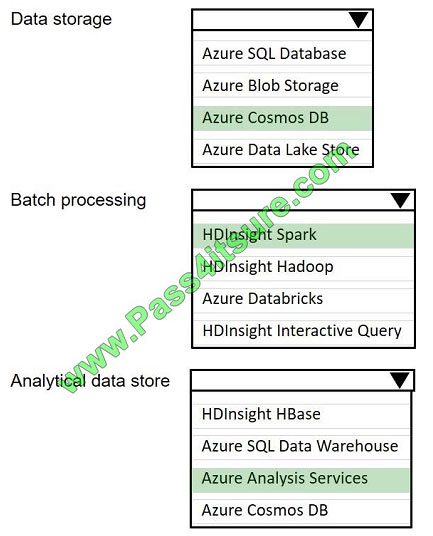

QUESTION 9

A company builds an application to allow developers to share and compare code. The conversations, code snippets, and links shared by people in the application are stored in a Microsoft Azure SQL Database instance. The application allows for searches of historical conversations and code snippets.

When users share code snippets, the code snippet is compared against previously shared code snippets by using a combination of Transact-SQL functions including SUBSTRING, FIRST_VALUE, and SQRT. If a match is found, a link to the match is added to the conversation.

Customers report the following issues:

- Delays occur during live conversations

- A delay occurs before matching links appear after code snippets are added to conversations

You need to resolve the performance issues.

Which technologies should you use? To answer, drag the appropriate technologies to the correct issues. Each technology may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:

Correct Answer:

Box 1: memory-optimized table

In-Memory OLTP can provide great performance benefits for transaction processing, data ingestion, and transient data scenarios.

Box 2: materialized view

To support efficient querying, a common solution is to generate, in advance, a view that materializes the data in a format suited to the required results set. The Materialized View pattern describes generating prepopulated views of data in environments where the source data isn’t in a suitable format for querying, where generating a suitable query is difficult, or where query performance is poor due to the nature of the data or the data store.

These materialized views, which only contain data required by a query, allow applications to quickly obtain the information they need. In addition to joining tables or combining data entities, materialized views can include the current values of calculated columns or data items, the results of combining values or executing transformations on the data items, and values specified as part of the query. A materialized view can even be optimized for just a single query.

References:

https://docs.microsoft.com/en-us/azure/architecture/patterns/materialized-view

QUESTION 10

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution. Determine whether the solution meets the stated goals.

You develop a data ingestion process that will import data to a Microsoft Azure SQL Data Warehouse. The data to be ingested resides in parquet files stored in an Azure Data Lake Gen 2 storage account.

You need to load the data from the Azure Data Lake Gen 2 storage account into the Azure SQL Data Warehouse.

Solution:

1. Use Azure Data Factory to convert the parquet files to CSV files.

2. Create an external data source pointing to the Azure storage account.

3. Create an external file format and external table using the external data source.

4. Load the data using the INSERT…SELECT statement.

Does the solution meet the goal?

A. Yes

B. No

Correct Answer: B

There is no need to convert the parquet files to CSV files.

You load the data using the CREATE TABLE AS SELECT statement.

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-load-from-azure-data-lake-store

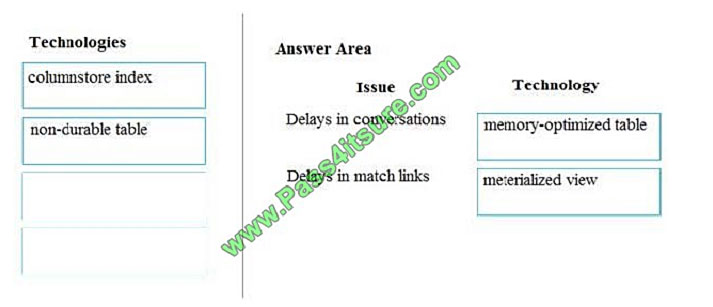

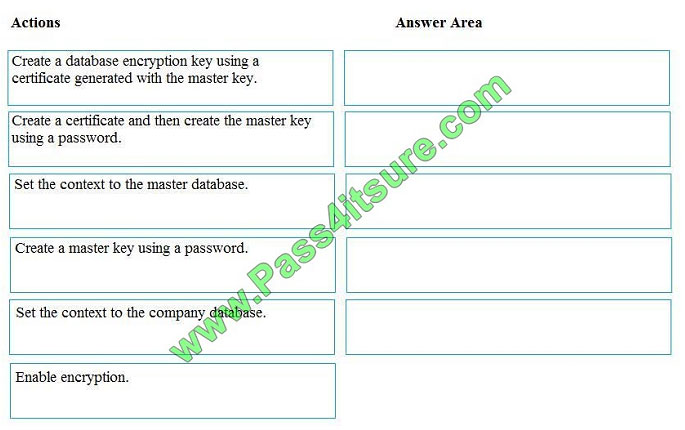

QUESTION 11

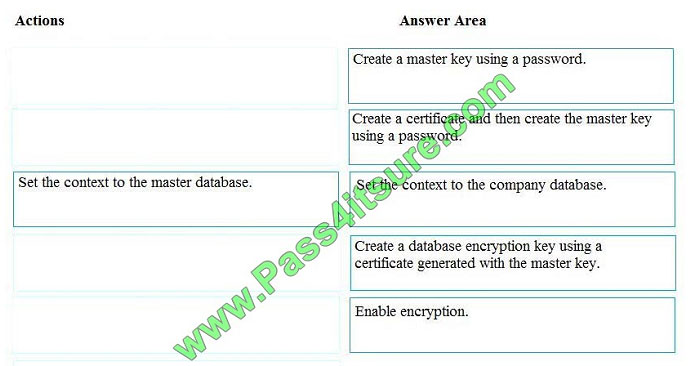

You manage security for a database that supports a line of business application.

Private and personal data stored in the database must be protected and encrypted.

You need to configure the database to use Transparent Data Encryption (TDE).

Which five actions should you perform in sequence? To answer, select the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:

Step 1: Create a master key

Step 2: Create or obtain a certificate protected by the master key

Step 3: Set the context to the company database

Step 4: Create a database encryption key and protect it by the certificate

Step 5: Set the database to use encryption

Example code:

    USE master;
    GO
    CREATE MASTER KEY ENCRYPTION BY PASSWORD = '<UseStrongPasswordHere>';
    GO
    CREATE CERTIFICATE MyServerCert WITH SUBJECT = 'My DEK Certificate';
    GO
    USE AdventureWorks2012;
    GO
    CREATE DATABASE ENCRYPTION KEY
    WITH ALGORITHM = AES_128
    ENCRYPTION BY SERVER CERTIFICATE MyServerCert;
    GO
    ALTER DATABASE AdventureWorks2012
    SET ENCRYPTION ON;
    GO

References: https://docs.microsoft.com/en-us/sql/relational-databases/security/encryption/transparent-data-encryption

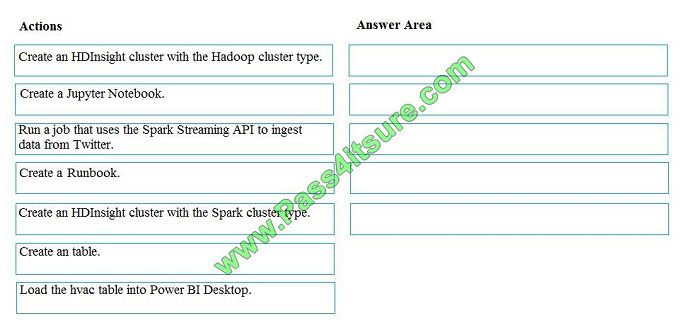

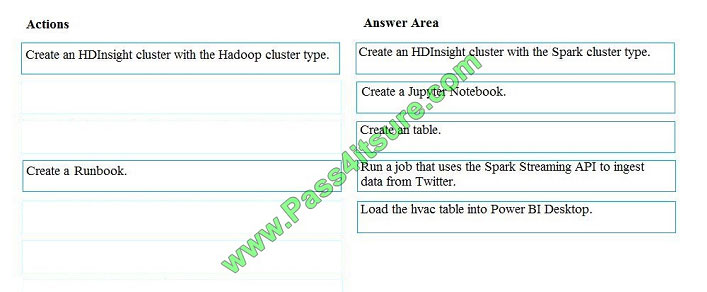

QUESTION 12

You develop data engineering solutions for a company.

A project requires analysis of real-time Twitter feeds. Posts that contain specific keywords must be stored and processed on Microsoft Azure and then displayed by using Microsoft Power BI. You need to implement the solution.

Which five actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:

Step 1: Create an HDInsight cluster with the Spark cluster type

Step 2: Create a Jupyter Notebook

Step 3: Create a table

The Jupyter Notebook that you created in the previous step includes code to create an hvac table.

Step 4: Run a job that uses the Spark Streaming API to ingest data from Twitter

Step 5: Load the hvac table into Power BI Desktop

You use Power BI to create visualizations, reports, and dashboards from the Spark cluster data.

References:

https://acadgild.com/blog/streaming-twitter-data-using-spark

https://docs.microsoft.com/en-us/azure/hdinsight/spark/apache-spark-use-with-data-lake-store

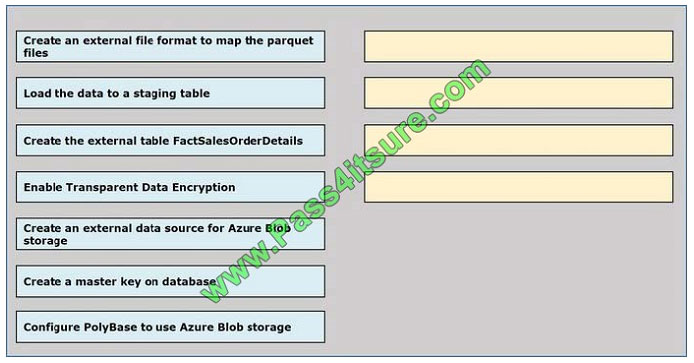

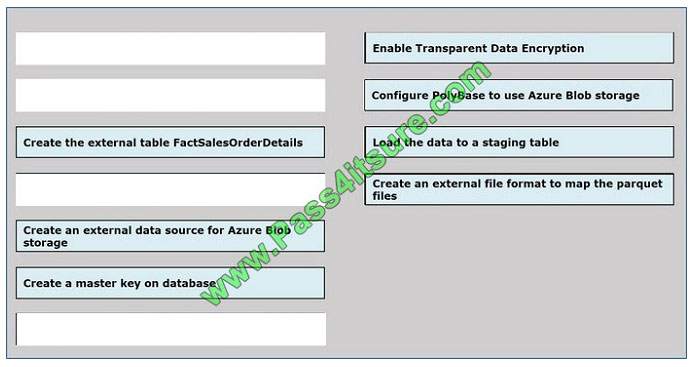

QUESTION 13

You are creating a managed data warehouse solution on Microsoft Azure.

You must use PolyBase to retrieve data from Azure Blob storage that resides in parquet format and load the data into a large table called FactSalesOrderDetails.

You need to configure Azure SQL Data Warehouse to receive the data.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:


The benefits of Pass4itsure

Pass4itsure offers the latest exam practice questions and answers free of charge. A team of professional exam experts updates the exam questions throughout the year to keep them valid. The aim is a high pass rate and good value for money, to help you pass the exam on your first attempt.


Summary:

Get the full Microsoft Certifications DP-200 exam dump here: https://www.pass4itsure.com/dp-200.html (Q&As: 86). Follow this blog for regular updates with the latest valid exam dumps to help you improve your skills.


Written by Ralph K. Merritt

